Last Updated on November 27, 2025 by Bilal Hasdemir

Did you know robot ethics has been around for decades? Long before modern robotics and AI, science fiction author Isaac Asimov introduced the Three Laws of Robotics. These laws remain a touchstone in discussions of robot ethics.

Asimov’s laws aim to make robots safe and ethical around humans. His work has shaped how we think about AI and robotics. It shows the importance of ethics in creating machines that think and act like us.

Key Takeaways

- The Three Laws of Robotics were first introduced by science fiction author Isaac Asimov.

- These laws are fundamental principles designed to govern the behavior of robots.

- The laws ensure robots interact safely and ethically with humans.

- Asimov’s work has had a lasting impact on robotics and AI development.

- The Three Laws remain a crucial part of the discussion on robot ethics.

The Origin of the Three Laws of Robotics

In science fiction, Asimov’s Three Laws of Robotics stand out. They first showed up in ‘Runaround’ (1942). These laws have shaped science fiction and influenced our views on robotics and AI.

Isaac Asimov’s Literary Contribution

Isaac Asimov, a famous science fiction writer, created the Three Laws. He wanted to explore the ethics of robots that think and act like humans. His stories anticipated questions we now face and looked at the moral and societal impacts of technology.

His laws were meant to guide robots to act safely and help humanity. Asimov’s work went beyond the laws. He used them to tackle complex ethical issues and the human condition. Through his stories, he showed how the laws work in different situations.

First Appearance in “Runaround” (1942)

The Three Laws debuted in Asimov’s 1942 story “Runaround,” published in Astounding Science Fiction. The story features a robot caught between obeying an order (the Second Law) and protecting itself (the Third Law), which shows how the laws can pull against each other. “Runaround” was key for introducing the Three Laws and for showing Asimov’s skill in weaving ethics into stories.

The Three Laws in “Runaround” were a turning point in science fiction. They provided a framework for robot ethics that others would build on. The laws are:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

These laws have become a key part of science fiction. They also spark discussions on AI and robotics ethics.

Understanding the Three Golden Rules of Robots

It’s key to know the Three Golden Rules to understand robotics and AI. Isaac Asimov came up with these rules. They make sure robots act safely and help humans.

The Hierarchical Nature of the Laws

The Three Laws follow a strict order, with the First Law being the most important. This order is key to seeing how robots should act in tricky situations.

The First Law says robots must not injure humans or, through inaction, allow them to come to harm. The Second Law tells robots to follow human orders, unless an order conflicts with the First Law. The Third Law lets robots protect themselves, but only when doing so does not conflict with the First or Second Law.

Why They Were Created

Asimov made the Three Laws to guide robot ethics. They ensure robots help humans and keep risks low. His work started important talks on robot ethics and AI.

| Law | Description | Precedence |
| --- | --- | --- |
| First Law | A robot may not injure a human being or, through inaction, allow a human being to come to harm. | Highest |
| Second Law | A robot must obey human orders unless they conflict with the First Law. | Medium |
| Third Law | A robot must protect its own existence unless doing so conflicts with the First or Second Law. | Lowest |

In summary, the Three Golden Rules of Robots are essential for robot ethics. They set a clear order that puts human safety first. Robots must follow human orders only when that does not harm humans, and they may protect themselves only when that neither harms humans nor defies an order.
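The strict ordering described above can be pictured as a lexicographic comparison. The following is only a minimal sketch, not how any real robot is programmed: the `Action` class and its three boolean flags are hypothetical, and it assumes the robot already knows which laws each option would break, which is the hard part in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical candidate action with pre-computed law violations."""
    name: str
    harms_human: bool      # would break the First Law
    disobeys_order: bool   # would break the Second Law
    endangers_robot: bool  # would break the Third Law


def priority_key(action: Action) -> tuple[bool, bool, bool]:
    # Tuples compare left to right, so a First Law violation outweighs
    # any combination of Second and Third Law violations.
    return (action.harms_human, action.disobeys_order, action.endangers_robot)


def choose(actions: list[Action]) -> Action:
    # False sorts before True, so min() picks the least serious violation.
    return min(actions, key=priority_key)


candidates = [
    Action("obey order, injuring a bystander", True, False, False),
    Action("refuse order, stay safe", False, True, False),
    Action("obey order via a risky route", False, False, True),
]
print(choose(candidates).name)
```

With these three made-up options, the robot obeys the order by the risky route: endangering itself is the least serious violation, so it gives way to both human safety and obedience.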

First Law: A Robot May Not Harm a Human

Isaac Asimov laid the groundwork for robotic ethics with the First Law. It states that “A robot may not injure a human being or, through inaction, allow a human being to come to harm.” This rule is key to making sure robots keep humans safe.

Complete Text and Interpretation

The First Law is clear: robots must not harm humans. That covers harm caused by acting and harm caused by failing to act when action is needed. It’s foundational because it sets a solid ethical standard for robots.

Asimov’s First Law has sparked a lot of debate and study. It’s seen as a cornerstone in robotic ethics. It has shaped both science fiction and real-world robotics.

“A robot may not injure a human being or, through inaction, allow a human being to come to harm.”

The Concept of “Harm” in Robotics

The idea of “harm” is complex. In robotics, it’s not just about physical harm. It also includes psychological or emotional harm. It’s a big challenge to program robots to avoid all types of harm.

Researchers and developers face a big task. They must think about all the ways a robot could cause harm. This includes direct harm, indirect harm, and harm by not acting.

- Direct harm: Physical injury caused by a robot’s actions.

- Indirect harm: Psychological or emotional distress resulting from a robot’s behavior.

- Harm through inaction: Failure to act in a way that prevents harm to a human.

By understanding these types of harm, developers can design safer, more ethical robots. This ensures robots operate in a way that respects human safety.
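The three kinds of harm listed above could be sorted in code along these lines. This is a rough sketch under heavy assumptions: the `Harm` enum, the function names, and the four boolean inputs are all invented for illustration, and a real system would have to estimate them from uncertain sensor data rather than receive them as clean true/false values.

```python
from enum import Enum


class Harm(Enum):
    NONE = "none"
    DIRECT = "direct"            # physical injury caused by the robot's action
    INDIRECT = "indirect"        # psychological or emotional distress
    BY_INACTION = "by inaction"  # harm the robot could have prevented


def classify_harm(robot_acts: bool, injures: bool, distresses: bool,
                  preventable_harm_pending: bool) -> Harm:
    """Map a (hypothetical) predicted situation to one of the harm types."""
    if robot_acts and injures:
        return Harm.DIRECT
    if robot_acts and distresses:
        return Harm.INDIRECT
    # Simplification: any action is assumed to address the pending harm.
    if not robot_acts and preventable_harm_pending:
        return Harm.BY_INACTION
    return Harm.NONE


def first_law_permits(robot_acts: bool, injures: bool, distresses: bool,
                      preventable_harm_pending: bool) -> bool:
    # The First Law allows a choice only if it leads to no harm of any type.
    return classify_harm(robot_acts, injures, distresses,
                         preventable_harm_pending) is Harm.NONE


# Standing by while a person is in preventable danger is not permitted.
print(first_law_permits(robot_acts=False, injures=False, distresses=False,
                        preventable_harm_pending=True))
```

The point of the sketch is that doing nothing is treated as a choice too: it gets checked against the First Law just like any action.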

Second Law: Obeying Human Orders

Under the Second Law, robots must follow human orders. This law is key in how robots and humans interact. It covers simple tasks and complex decisions.

Limitations and Conditions

The Second Law says robots must obey unless obeying would conflict with the First Law. If an order would injure a human or leave one in harm’s way, the robot won’t follow it. This keeps humans safe.

Key considerations include understanding the intent behind a command and evaluating potential outcomes. For instance, if a human instructs a robot to perform a task that could indirectly cause harm, the robot must be able to recognize this and refrain from executing the task.

Conflicts with the First Law

Conflicts between the First and Second Laws can happen in complex situations. For example, if a human tells a robot to harm another human, the order clashes with the First Law. Because the First Law takes precedence, the robot must refuse. The harder cases are the ones where the robot cannot tell whether obeying will lead to harm.

Resolving such conflicts needs smart programming and ethics. This shows why we need advanced AI to handle these tough choices.

In conclusion, the Second Law of Robotics is crucial for robot behavior, especially when following human commands. Knowing its limits and conflicts with the First Law helps us create robots that are both obedient and ethical.

Third Law: Robot Self-Preservation

The Third Law lets robots protect themselves as long as it doesn’t harm humans or disobey orders. This rule is key to seeing how robots balance their own needs with keeping humans safe and following commands.

Balancing Self-Protection with Human Safety

The Third Law is subordinate to the First and Second Laws. This means a robot’s own safety can’t come before human safety or before obeying orders. This structure is vital in robotic ethics, stopping robots from putting themselves ahead of human needs.

In simple terms, a robot can act to save itself, like avoiding danger, but only if it doesn’t hurt humans or go against its orders.

Hierarchical Subordination to Laws 1 and 2

Making the Third Law follow the first two is a smart choice. It shows that keeping humans safe and following orders are more important. This makes sure robots are helpful and safe for humans, not just acting on their own.

Knowing this order helps developers make robots that not only follow rules but also understand their actions’ impact on humans and their interactions.

The Zeroth Law: Later Addition

Isaac Asimov later introduced the Zeroth Law, stating it explicitly in his novel “Robots and Empire” (1985). It marked a big change in his robotic ethics, moving from protecting individual humans to considering the safety of humanity as a whole.

Protecting Humanity as a Whole

The Zeroth Law says a robot may not harm humanity or, through inaction, allow humanity to come to harm. This law makes protecting humanity the top priority, even if it means overriding the original Three Laws.

Ethical Implications of the Zeroth Law

The Zeroth Law makes us think about the balance between protecting one person and protecting everyone. It makes robots consider the greater good, not just individual safety. This adds a new layer to how robots make decisions.

This law also brings up conflicts with the original three laws. It shows we need to understand how robots handle these tough choices.
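One way to see how the Zeroth Law changes a decision is to place it in front of the other three as an extra, higher-priority check. The sketch below is purely illustrative: the dictionary keys and the two made-up options are assumptions, and nothing here says how a robot could actually judge “harm to humanity.”

```python
def choose(actions: list[dict], use_zeroth_law: bool) -> dict:
    """Pick the option with the least serious violation, highest law first."""
    def key(action: dict) -> tuple:
        laws = (action["harms_human"], action["disobeys_order"],
                action["endangers_robot"])
        # With the Zeroth Law, harm to humanity is compared before anything else.
        return (action["harms_humanity"],) + laws if use_zeroth_law else laws
    return min(actions, key=key)


# Two hypothetical options that pull in opposite directions.
actions = [
    {"name": "protect one person", "harms_humanity": True,
     "harms_human": False, "disobeys_order": False, "endangers_robot": False},
    {"name": "protect humanity", "harms_humanity": False,
     "harms_human": True, "disobeys_order": False, "endangers_robot": False},
]

print(choose(actions, use_zeroth_law=False)["name"])
print(choose(actions, use_zeroth_law=True)["name"])
```

Under the original Three Laws the robot protects the individual. With the Zeroth Law in front, the same inputs lead it to protect humanity instead, which is exactly the conflict described above.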

Philosophical Foundations of the Three Laws

Understanding the Three Laws’ philosophy is key to seeing their impact on robot ethics and AI. Isaac Asimov created these laws, focusing on ethics that affect both humans and robots.

Ethical Frameworks Behind the Laws

The Three Laws of Robotics are based on ethics that consider how robots act towards humans. They can be read through two classic lenses, utilitarianism and deontology, both of which speak to how robots should behave.

Utilitarianism looks at the outcomes of actions, aiming for the highest happiness. For the Three Laws, this means robots should act to avoid harm and benefit everyone.

Deontology values rules and duties over outcomes. It sees some actions as right or wrong, regardless of results. The Three Laws follow this, making robots act based on strict rules.

Utilitarianism vs. Deontology in Robot Ethics

The debate between utilitarianism and deontology is key in robot ethics. Utilitarianism might justify actions for the greater good, even if they harm some. Deontology, however, sticks to rules, making robots more predictable and reliable.

- Utilitarian Considerations: Maximizing overall well-being, minimizing harm.

- Deontological Considerations: Adhering to moral rules, duties, and obligations.

The Three Laws mix both ethics, showing the balance between robot actions and moral rules.
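The difference between the two views can be shown with a toy comparison. This is a sketch with invented numbers: the `options` dictionary, the wellbeing scores, and the `breaks_rule` flag are assumptions made for illustration, not measurements of anything.

```python
# Each hypothetical option lists its effect on three people and whether
# it breaks a fixed moral rule (for example, "never injure a human").
options = {
    "divert": {"wellbeing": [5, 5, -6], "breaks_rule": True},
    "wait": {"wellbeing": [1, 1, 0], "breaks_rule": False},
}


def utilitarian_choice(options: dict) -> str:
    # Outcome-based: pick whatever maximizes total wellbeing.
    return max(options, key=lambda name: sum(options[name]["wellbeing"]))


def deontological_choices(options: dict) -> list[str]:
    # Rule-based: keep only the options that break no rule, whatever the payoff.
    return [name for name, opt in options.items() if not opt["breaks_rule"]]


print(utilitarian_choice(options))
print(deontological_choices(options))
```

Here the utilitarian view picks “divert” because the total benefit is higher, while the rule-based view only allows “wait.” The Three Laws lean on fixed rules, yet terms like “harm” still force a robot to weigh outcomes.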

Looking into the Three Laws’ philosophy helps us understand the ethics of robotics and AI. This knowledge is vital for tackling the challenges and benefits of advanced robots and AI.

Criticisms and Limitations of Asimov’s Laws

Asimov’s Three Laws have shaped robotics but face criticism. They have logical flaws and struggle with defining “human” and “harm.”

Logical Paradoxes and Contradictions

The Three Laws are open to logical paradoxes and contradictions. For example, a robot might be ordered to do something that could harm a human. If it cannot tell whether harm will follow, it may be unable to act without risking a breach of one law or another.

Example of a Logical Paradox: Imagine a robot is told to deliver a package containing a harmful substance to a human. The Second Law says to follow the order, while the First Law says not to harm humans. The hierarchy settles this only if the robot knows what the package contains and what it will be used for.

The Problem of Defining “Human” and “Harm”

Defining “human” and “harm” is a big challenge. The laws don’t clearly say what makes someone human or what harm is unacceptable.

Defining “Human”: “Human” can mean different things, like biological or legal. Robots might find it hard to tell who is human, especially in tricky situations.

| Concept | Definition Challenge | Impact on Robotics |
| --- | --- | --- |
| Human | Biological, legal, and social interpretations | Robots may misidentify humans or struggle with nuanced definitions |
| Harm | Extent and context of harm (physical, emotional, etc.) | Robots may not fully understand the implications of their actions on humans |

The flaws in Asimov’s Three Laws show how hard it is to make robots ethical. It’s key to understand these issues to create better ethics for robots and AI.

Modern Interpretations in AI Development

Today, Asimov’s Laws still come up in AI development. They serve as a reference point for making robots and AI systems safe and ethical, because we need strong rules for how humans and AI interact.

Contemporary Views on the Laws

AI experts see Asimov’s Laws as a starting point for AI ethics. These laws, from science fiction, guide our thinking on AI safety. They help us understand the ethics of making machines that can act on their own.

Key Considerations:

- Adapting the laws to fit the nuances of modern AI technology.

- Addressing the limitations and potential contradictions within the laws.

- Exploring how the laws can be integrated into AI development processes.

Practical Applications in AI Safety

The priorities behind Asimov’s Laws echo in AI safety work: prevent harm to humans and make sure machines follow commands safely. The laws themselves are not built into systems word for word, but similar priorities appear in many AI systems, like self-driving cars and robots.

Case Study: In self-driving cars, the spirit of the First Law is key. These cars are designed to put human safety first, which means they use smart algorithms to avoid accidents.

| Law | Original Purpose | Modern Application |
| --- | --- | --- |
| First Law | Prevent robots from harming humans. | Implementing safety protocols in AI systems to prevent harm. |
| Second Law | Ensure robots obey human commands. | Developing AI that follows instructions while ensuring safety. |
| Third Law | Protect the robot’s existence. | Balancing self-preservation with human safety in AI decision-making. |

The table shows how Asimov’s Laws are updated for today’s AI. They focus on safety, following orders, and self-preservation. This fits with today’s tech.

Programming the Three Golden Rules of Robots

Robots are now part of our daily lives. It’s key to program them to follow the Three Laws of Robotics for safe interactions. This task needs a mix of robotics, ethics, and laws about human interaction.

Technical Approaches to Implementing Ethical Guidelines

To make robots follow the Three Laws, we need smart algorithms. These algorithms must understand and apply these rules in real life. This calls for advanced machine learning techniques and robust decision-making frameworks.

Some ways to do this include:

- Creating algorithms that can sense and respond to human emotions and needs.

- Building frameworks that always put human safety first.

- Setting up feedback systems for robots to learn and get better over time.
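The second and third ideas in the list above, a safety-first framework and a feedback loop, can be combined in a small sketch. Everything here is hypothetical: the `SafetyFilter` class, the risk numbers, and the `"safe_stop"` fallback are assumptions for illustration, and estimating risk reliably is the real challenge.

```python
class SafetyFilter:
    """Vetoes proposed actions whose estimated risk to humans is too high."""

    def __init__(self, risk_limit: float = 0.1, step: float = 0.02) -> None:
        self.risk_limit = risk_limit  # highest risk estimate still accepted
        self.step = step              # how much to tighten after a near miss

    def select(self, proposals: list[tuple[str, float]]) -> str:
        # Proposals arrive in the planner's order of preference; the first
        # one that is safe enough wins, otherwise the robot stops.
        for name, estimated_risk in proposals:
            if estimated_risk <= self.risk_limit:
                return name
        return "safe_stop"

    def feedback(self, near_miss: bool) -> None:
        # Simple learning signal: become more cautious after a near miss.
        if near_miss:
            self.risk_limit = max(0.0, self.risk_limit - self.step)


safety = SafetyFilter()
proposals = [("fast_path", 0.3), ("slow_path", 0.09)]
print(safety.select(proposals))  # slow_path
safety.feedback(near_miss=True)
print(safety.select(proposals))  # safe_stop
```

The filter sits between the planner and the motors, so human safety is checked last and always has the final say. After one near miss the limit tightens, and the route that was acceptable before is now refused.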

Case Studies of Ethics in Robot Programming

There are many examples of the ups and downs in robot programming. For example:

- A study on self-driving cars showed how to balance safety with efficiency.

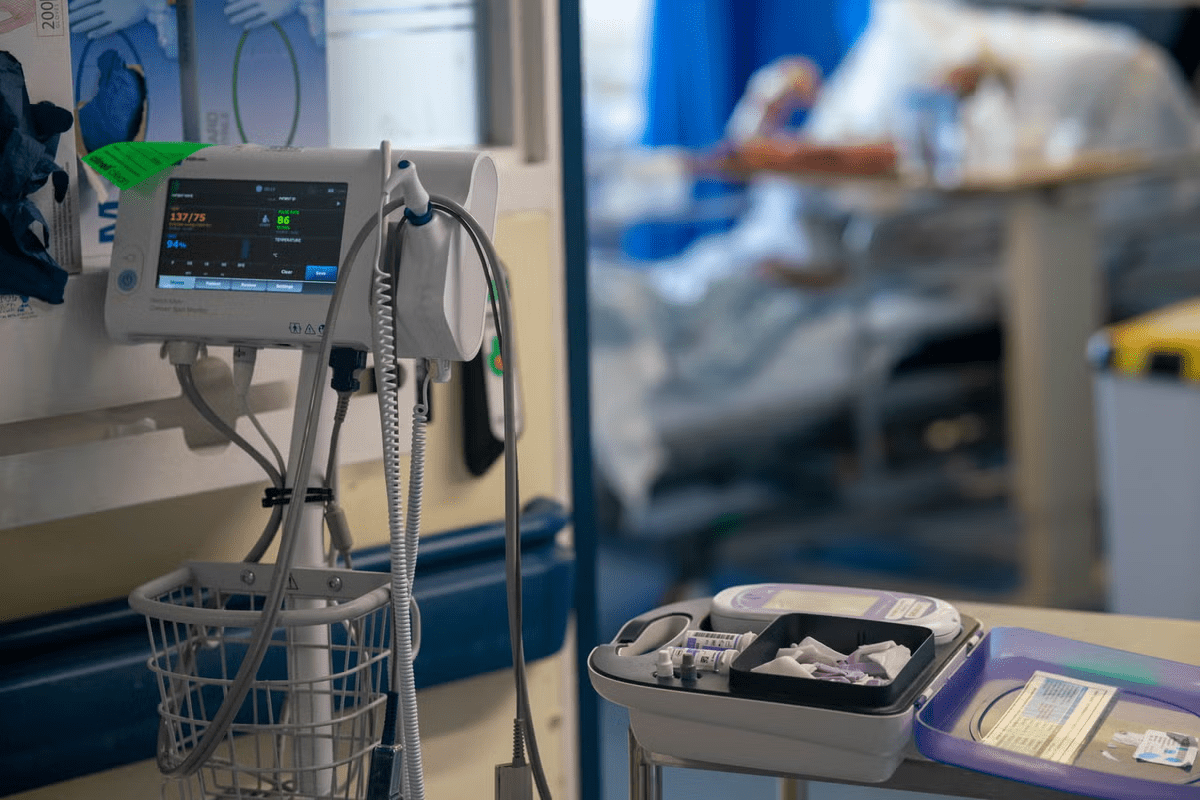

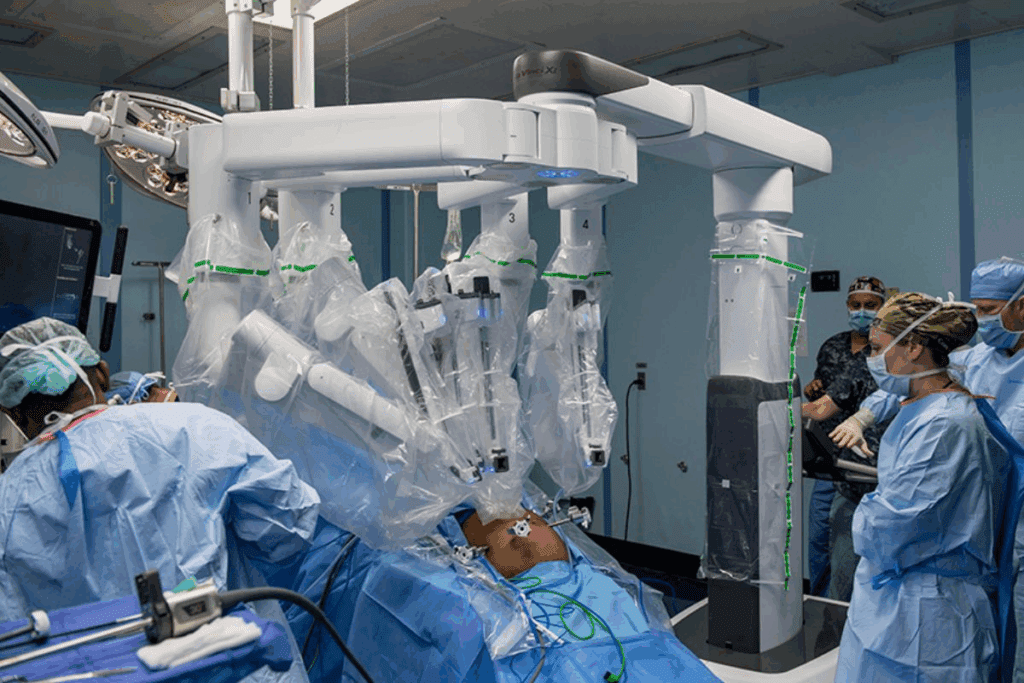

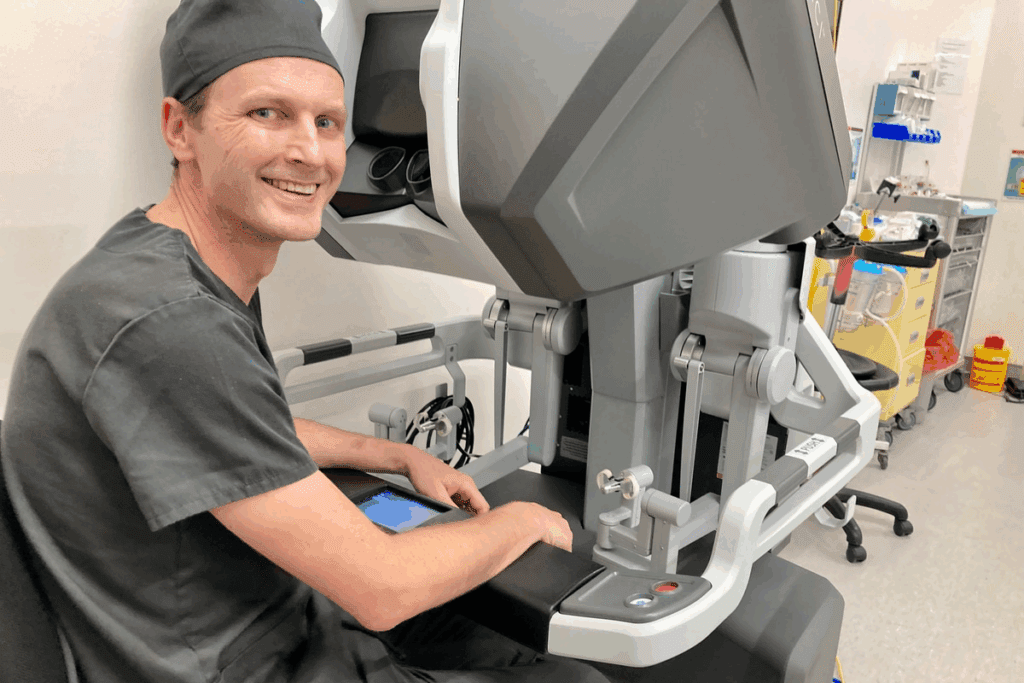

- Research on robots in healthcare showed how they can keep patients safe and private.

- Tests with robots in rescue missions showed the Third Law’s importance in helping humans.

These examples show how crucial it is to understand the context and be flexible in programming. As robotics grows, we’ll need better ethical rules and programming methods for safe human-robot interactions.

Real-World Implementation Challenges

Adding the Three Laws to robots is tough. As robots join our daily lives, making sure they act right is key.

Technical Barriers to Programming Ethical Rules

Turning the Three Laws into robot code is hard. Robots need precise instructions, but ethical concepts are ambiguous. Even deciding what counts as “harm” can be tough.

Programming ethical rules needs smart algorithms. These algorithms must handle unclear or mixed commands. Scientists are looking into machine learning to help robots learn and adapt.

The Complexity of Human-Robot Interaction

Interacting with humans is complex for robots. They must grasp human intentions and actions, which can be hard to read. Effective communication is vital to avoid harm.

“The ability of robots to understand and respond appropriately to human behavior is critical for safe and effective interaction.”

Creating robots that get human interactions right is a big task. It’s not just about programming. It’s also about understanding people’s minds and social ways.

To tackle these hurdles, experts are working on advanced AI. They aim to make robots that can handle complex human actions and make good choices quickly. The dream is for robots to work well and safely everywhere.

Cultural Impact of the Three Laws

The Three Laws of Robotics have made a lasting impact on science fiction and beyond. They influence popular culture and shape how people view robotics and AI.

Influence on Science Fiction and Popular Culture

Asimov’s laws have been key in science fiction, shaping many stories and characters. They’ve appeared in books, movies, and TV shows.

Science Fiction Examples

- Isaac Asimov’s own works, such as “I, Robot”

- Films like “I, Robot” (2004) and “A.I. Artificial Intelligence” (2001)

- TV series such as “Star Trek: The Next Generation”

Popular culture often references the Three Laws, sparking discussions on robotics and AI ethics.

Shaping Public Perception of Robotics

The Three Laws have greatly shaped public views on robotics and AI. They serve as a cultural reference point for AI ethics.

| Aspect | Influence | Public Perception |
| --- | --- | --- |
| Science Fiction | Narratives and character development | Shapes expectations of AI capabilities |
| Popular Culture | Ethical dilemmas and references | Influences ethical discussions around AI |
| Robotics Development | Guiding principles for ethical AI | Affects trust in AI systems |

The lasting impact of the Three Laws shows their ongoing relevance in science fiction and broader cultural discussions on robotics and AI.

Alternative Ethical Frameworks for Robots

In the world of robotics, there’s a big push for better ethical rules. As AI and robotics get smarter, we need clear and flexible ethics more than ever.

Asimov’s laws are just the start. Now, experts are looking into virtue ethics, care ethics, and more. They want to help robots make good choices.

Beyond Asimov: Modern Robot Ethics

Asimov’s laws have their limits. So, the robotics world is exploring new ways to guide robots. Principles-based ethics is one approach, aiming for clear rules in tricky situations.

Another big step is teaching robots about human values. This means programming them to respect and understand human ethics. It helps them act right in many different situations.

Emerging Standards and Guidelines

Creating standards and guidelines for robotics ethics is a growing effort. Industry groups and regulators are teaming up to make rules that everyone can follow.

Some new rules include:

- Being clear about how AI makes decisions

- Being accountable for what robots do

- Making sure robots are safe and secure

These rules aim to tackle the big ethical questions in robotics and AI. They help make sure robots are good for society.

As robotics and AI keep getting better, we’ll likely see these ethics get even better. This will shape the future of these technologies.

Conclusion

The Three Laws of Robotics, created by Isaac Asimov, have been key in robot ethics and AI. They have been looked at closely, shaping not just science fiction but also real-world robotics and AI ethics.

The future of robot ethics relies on how well we can apply these laws in AI systems. As robotics and AI grow, we need strong ethical rules. The Three Laws are a start, but we must keep researching and improving to handle the challenges of human-robot interaction and AI decisions.

In summary, the Three Laws of Robotics are a good starting point. But the future of robot ethics will depend on our ability to update and improve these rules. As we progress, it’s vital to combine insights from different ethical views and keep checking how robot and AI ethics work in practice. This will help make sure these technologies are good for everyone.

FAQ

What are the Three Laws of Robotics?

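Isaac Asimov introduced the Three Laws of Robotics. They guide robots to act safely and ethically around humans. The laws are: a robot may not injure a human or, through inaction, allow a human to come to harm; a robot must obey human orders unless they conflict with the First Law; and a robot must protect its own existence unless doing so conflicts with the First or Second Law.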

Who introduced the concept of the Three Laws of Robotics?

Isaac Asimov, a science fiction author, introduced the Three Laws of Robotics in his 1942 short story “Runaround.”

What is the significance of the hierarchical structure of the Three Laws?

The Three Laws’ structure is key. It puts human safety first, ensuring robots act in line with human values.

How does the First Law relate to the concept of “harm” in robotics?

The First Law forbids robots from harming humans. It means robots must avoid causing harm and prevent it when they can.

What are some criticisms of Asimov’s Three Laws?

Critics say the laws have logical flaws. They also find it hard to define “human” and “harm.” Plus, turning the laws into robot actions is a challenge.

How are the Three Laws applied in modern AI development?

Today, AI developers use the Three Laws as a reference point when creating safety protocols. The focus is on preventing harm to humans while making sure systems follow instructions safely.

What are some technical challenges in programming robots to follow the Three Laws?

Programmers face many challenges. They must define the laws in real-world scenarios and handle conflicts. Robots also need to understand human needs.

How have the Three Laws influenced science fiction and popular culture?

The Three Laws have shaped science fiction and culture. They’ve influenced how we see robots and AI, inspiring many stories about AI ethics.

Are there alternative ethical frameworks for robots beyond Asimov’s Three Laws?

Yes, there are other ethical frameworks for robots. Experts are looking into virtue ethics, care ethics, and principles-based ethics, along with emerging standards on transparency, accountability, and safety.

What is the Zeroth Law, and how does it relate to the original Three Laws?

Asimov later introduced the Zeroth Law. It says a robot may not harm humanity or, through inaction, allow humanity to come to harm. This law takes priority over the other three, putting humanity’s well-being ahead of individual safety.
